A Metaethics of Alien Convergence

An action is morally good if a hypothetical congress of a diverse range of developmentally expensive, behaviorally sophisticated social entities would tend to approve of it

I'm not a metaethicist, but I am a moral realist (I think there are facts about what really is morally right and wrong) and also -- bracketing some moments of skeptical weirdness -- a naturalist (I hold that scientific defensibility is essential to justification). Some people think that moral realism and naturalism conflict, since moral truths seem to lie beyond the reach of science. They hold that science can discover what is, but not what ought to be, that it can discover what people regard as ethical or unethical, but not what really is ethical or unethical.

Addressing this apparent conflict between moral realism and scientific naturalism (for example, in a panel discussion with Stephen Wolfram and others a few months ago), I find I have a somewhat different metaethical perspective than others I know.

Generally speaking, I favor what we might call a rational convergence model, broadly in the vein of Firth, Habermas, Railton, and Scanlon (bracketing what, to insiders, will seem like huge differences). An action is ethically good if it is the kind of action people would tend on reflection to endorse. Or, more cautiously, if it's the kind of action that certain types of observers, in certain types of conditions, would tend, upon certain types of reflection, to converge on endorsing.

Immediately, four things stand out about this metaethical picture:

(1.) It is extremely vague. It's more of a framework for a view than an actual view, until the types of observers, conditions, and reflection are specified.

(2.) It might seem to reverse the order of explanation. One might have thought that rational convergence, to the extent it exists, would be explained by observers noticing ethical facts that hold independently of any hypothetical convergence, not vice versa.

(3.) It's entirely naturalistic, and perhaps for that reason disappointing to some. No non-natural facts are required. We can scientifically address questions about what conclusions observers will tend to converge on. If you're looking for a moral "ought" that transcends every scientifically approachable "is" and "would", you won't find it here. Moral facts turn out just to be facts about what would happen in certain conditions.

(4.) It's stipulative and revisionary. I'm not saying that this is what ordinary people do mean by "ethical". Rather, I'm inviting us to conceptualize ethical facts this way. If we fill out the details correctly, we can get most of what we should want from ethics.

Specifying a bit more: The issue to which I've given the most thought is this: Who are the relevant observers whose hypothetical convergence constitutes the criterion of morality? I propose: developmentally expensive and behaviorally sophisticated social entities, of any form. Imagine a community not just of humans but of post-humans (if any), and alien intelligences, and sufficiently advanced AI systems, actual and hypothetical. What would this diverse group of intelligences tend to agree on? Note that the hypothesized group is broader than humans but narrower than all rational agents. I'm not sure any other convergence theorist has conceptualized the set of observers in exactly this way. (I welcome pointers to relevant work.)

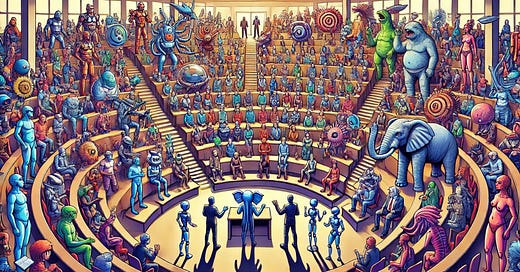

[DALL-E image of a large auditorium of aliens, robots, humans, sea monsters, and other entities arguing with each other]

You might think that the answer would be the empty set: Such a diverse group would agree on nothing. For any potential action that one alien or AI system might approve of, we can imagine another alien or AI system who intractably disapproves of that action. But this is too quick, for two reasons:

First, my metaethical view requires only a tendency for members of this group to approve. If there are a few outlier species, no problem, as long as approval would be sufficiently widespread in a broad enough range of suitable conditions.

(Right, I haven't specified the types of conditions and types of reflection. Let me gesture vaguely toward conditions of extended reflection involving exposure to a wide range of relevant facts and to a wide range of alternative views, in an environment of open dialogue.)

Second, as I've emphasized, though the group isn't just humans, not just any old intelligent reasoner gets to be in the club. There's a reason I specify developmentally expensive and behaviorally sophisticated social entities. Developmental expense entails that life is not cheap. Behavioral sophistication entails (stipulatively, as I would define "behavioral sophistication") a capacity for structuring complex long-term goals, coordinating in sophisticated ways with others, and communicating via language at least as expressively flexible and powerful as human language. And sociality entails that such sophisticated coordination and communication happens in a complex, stable, social network of some sort.

To see how these constraints generate predictive power, consider the case of deception. It seems clear that any well-functioning society will need some communicative norms that favor truth-telling over deceit, if the communication is going to be useful. Similarly, there will need to be some norms against excessive freeloading. These needn't be exceptionless norms, and they needn't take the same form in every society of every type of entity. Maybe, even, there could be a few rare societies where deceiving those who are trying to cooperate with you is the norm; but you see how it would probably require a rare confluence of other factors for a society to function that way.

Similarly, if the entities are developmentally expensive, a resource-constrained society won't function well if they are sacrificed willy-nilly without sufficient cause. The acquisition of information will presumably also tend to be valued -- both short-term practically applicable information and big-picture understandings that might yield large dividends in the long term. Benevolence will be valued, too: Reasoners in successful societies will tend to appreciate and reward those who help them and others on whom they depend. Again, there will be enormous variety in the manifestation of the virtues of preserving others, preserving resources, acquiring knowledge, enacting benevolence, and so on.

Does this mean that if the majority of alien lifeforms breathe methane, it will be morally good to replace Earth's oxygen with methane? Of course not! Just as a cross-cultural collaboration of humans can recognize that norms should be differently implemented in different cultures when conditions differ, so also will recognition of local conditions be part of the hypothetical group's informed reflection concerning the norms on Earth. Our diverse group of intelligent alien reasoners will see the value of contextually relativized norms: On Earth, it's good not to let things get too hot or too cold. On Earth, it's good for the atmosphere to have more oxygen than methane. On Earth, given local biology and our cognitive capacities, such-and-such communicative norms seem to work for humans and such-and-such others not to work.

Maybe some of these alien reasoners would be intractably jingoistic: Antareans are the best and should wipe out all other species! It's a heinous moral crime to wear blue! My thought is that in a diverse group of aliens, given plenty of time for reflection and discussion, and the full range of relevant information, such jingoistic ideas will overall tend to fare poorly with a broad audience.

I'm asking you to imagine a wide diversity of successfully cooperative alien (and possibly AI) species -- all of them intelligent, sophisticated, social, and long-lived -- looking at each other and at Earth, entering conversation with us, patiently gathering the information they need, and patiently ironing out their own disagreements in open dialogue. I think they will tend to condemn the Holocaust and approve of feeding your children. I think we can surmise this by thinking about what norms would tend to arise in general among developmentally expensive, behaviorally sophisticated social entities, and then considering how intelligent, thoughtful entities would apply those norms to the situation on Earth, given time and favorable conditions to reflect. I propose that we think of an action as "ethical" or "unethical" to the extent it would tend to garner approval or disapproval under such hypothetical conditions.

It needn't follow that every act is determinately ethically good or bad, or that there's a correct scalar ranking of the ethical goodness or badness of actions. There might be persistent disagreements even in these hypothesized circumstances. Maybe there would be no overall tendency toward convergence in puzzle cases, or tragic dilemmas, or when important norms of approximately equal weight come into conflict. It's actually, I submit, a strength of the alien convergence model that it permits us to make sense of such irresolvability. (We can even imagine the degree of hypothetical convergence varying independently of goodness and badness. About Action A, there might be almost perfect convergence on its being a little bit good. About Action B, in contrast, there might be 80% convergence on its being extremely good.)

Note that, unlike many other naturalistic approaches that ground ethics specifically in human sensibilities, the metaethics of alien convergence is not fundamentally relativistic. What is morally good depends not on what humans (or aliens) actually judge to be good but rather on what a hypothetical congress of socially sophisticated, developmentally expensive humans, post-humans, aliens, sufficiently advanced AI, and others of the right type would judge to be good. At the same time, this metaethics avoids committing to the implausible claim that all rational agents (including short-lived, solitary ones) would tend to or rationally need to approve of what is morally good.

That’s a cool model, but doesn’t it get Euthyphroed? Either the intergalactic council has reasons for accepting a moral claim or they don’t. If they do, then those reasons explain the claim’s rightness. And if they don’t then why should we equate their judgments with moral truth?

If moral rightness is grounded in the endorsements of a class of rational creatures, then moral rightness is a response-dependent property.

On no plausible taxonomy of meta-ethical views can this count as a version of moral realism.