How the Mimicry Argument Against Robot Consciousness Works

A few months ago on this blog, I presented a "Mimicry Argument" against robot consciousness -- or more precisely, an argument that aims to show why it's reasonable to doubt the consciousness of an AI that is built to mimic superficial features of human behavior. Since then, my collaborator Jeremy Pober and I have presented this material to philosophy audiences in Sydney, Hamburg, Lisbon, Oxford, Krakow, and New York, and our thinking has advanced.

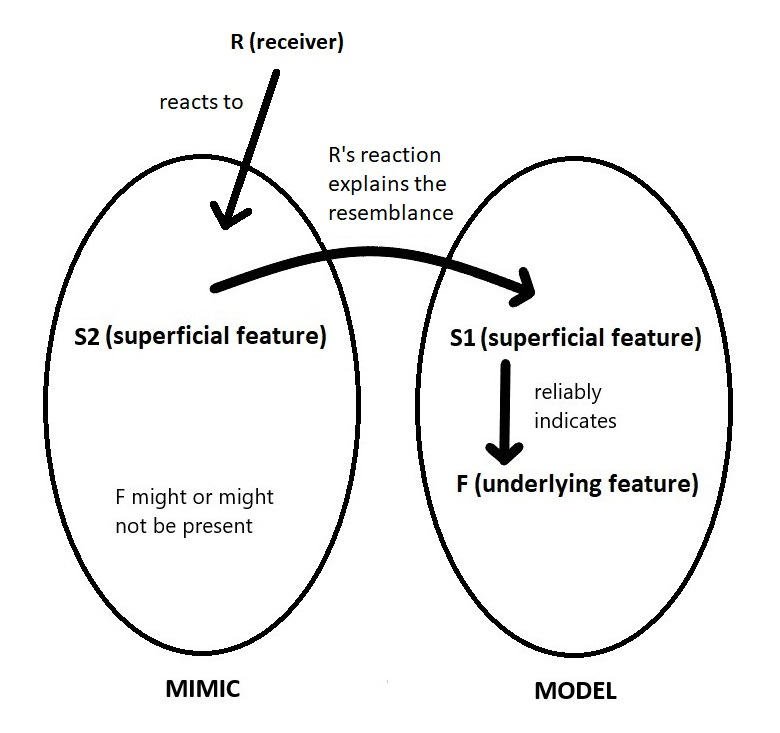

Our account of mimicry draws on work on mimicry in evolutionary biology. On our account, a mimic is an entity:

with a superficial feature (S2) that is selected or designed to resemble a superficial feature (S1) of some model entity

for the sake of deceiving, delighting, or otherwise provoking a particular reaction in some particular audience or "receiver"

because the receiver treats S1 in the model entity as an indicator of some underlying feature F.

Viceroy butterflies have wing coloration patterns (S2) that resemble the wing color patterns (S1) of monarch butterflies for the sake of misleading predators who treat S1 as an indicator of toxicity. Parrots emit songs that resemble the songs or speech of other birds or human caretakers for social advantage. If the receiver is another parrot, the song in the model (but not necessarily the mimic) indicates group membership. If the receiver is a human, the speech in the model (but not necessarily the mimic) indicates linguistic understanding. As the parrot case illustrates, not all mimicry needs to be deceptive, and the mimic might or might not possess the feature the receiver attributes.

Here's the idea in a figure:

Pober and I define a "consciousness mimic" as an entity whose S2 resembles an S1 that, in the model entity, normally indicates consciousness. So, for example, a toy that says "hello" when powered on is a consciousness mimic: For the sake of a receiver (a child), it has a superficial feature (S2, the sound "hello" from its speakers) that resembles a superficial feature (S1) in an English-speaking human which normally indicates consciousness (since humans who say "hello" are normally conscious).

Arguably, Large Language Models like ChatGPT are consciousness mimics in this sense. They emit strings of text modeled on human-produced text for the sake of users who interpret that text as having semantic content of the same sort such text normally has when emitted by conscious humans.

Now, if something is a consciousness mimic, we can't straightforwardly infer its consciousness from its possession of S2 in the same way we can normally infer the model's consciousness from its possession of S1. The "hello" toy isn't conscious. And if ChatGPT is conscious, that will require substantial argument to establish; it can't be inferred in the same ready way that we infer consciousness in a human from human utterances.

Let me attempt to formalize this a bit:

(1.) A system is a consciousness mimic if:

a. It possesses superficial features (S2) that resemble the superficial features (S1) of a model entity.

b. In the model entity, the possession of S1 normally indicates consciousness.

c. The best explanation of why the mimic possesses S2 is the mimicry relationship described above.

(2.) Robots or AI systems – at least an important class of them – are consciousness mimics in this sense.

(3.) Because of (1c), if a system is a consciousness mimic, inference to the best explanation does not permit inferring consciousness from its possession of S2.

(4.) Some other argument might justify attributing consciousness to the mimic; but if the mimic is a robot or AI system, any such argument, for the foreseeable future, will be highly contentious.

(5.) Therefore, we are not justified in attributing consciousness to the mimic.

AI systems designed with outputs that look human might understandably tempt users to attribute consciousness based on those superficial features, but we should be cautious about such attributions. The inner workings of Large Language Models and other AI systems are causally complex and designed to generate outputs that look like the types of outputs humans produce, for the sake of being interpretable by humans. But not all causal complexity implies consciousness, and the superficial resemblance to the behaviorally sophisticated patterns we associate with consciousness could be misleading if such patterns can arise without consciousness.

The main claim is intended to be weak and uncontroversial: When the mimicry structure is present, significant further argument is required before attributing consciousness to an AI system based on superficial features suggestive of consciousness.

Friends of robot or AI consciousness may note two routes by which to escape the Mimicry Argument. They might argue, contra premise (2), that some important target types of artificial systems are not consciousness mimics. Or they might present an argument that the target system, despite being a consciousness mimic, is also genuinely conscious – an argument they believe is uncontentious (contra 4) or that justifies attributing consciousness despite being contentious (contra the inference from 4 to 5).

The Mimicry Argument is not meant to apply universally to all robots and AI systems. Its value, rather, is to clarify the assumptions implicit in arguments against AI consciousness on the grounds that AI systems merely mimic the superficial signs of consciousness. We can then better see both the merits of that type of argument and means of resisting it.

Another wonderful piece.

Your point could even apply to some organic, let's go with semi-sentient, mimics.

For instance, I (S2) could recite your piece (S1) word for word. Yet its meaning and the ability to create it (F) are far beyond me.

This makes me wonder if babies count as consciousness mimics. For a long time, they’re engaged in mimicry that doesn’t understand the underlying meaning of their actions. Ironically, that makes the idea of emergent consciousness from mimicry seem *more* plausible to me.

Perhaps combining this with some Douglas Hofstadter loopiness? Maybe my mind is a conceptual network that uses previous thoughts as prompts to “predict and generate” my next thought.

I hope this is vaguely coherent. I’m probably out of my depth.