The Leapfrog Hypothesis for AI Consciousness and Rights

The first conscious AI systems might have complex rather than simple conscious intelligence

The first genuinely conscious robot or AI system would, you might think, have relatively simple consciousness -- insect-like consciousness, or jellyfish-like, or frog-like -- rather than the rich complexity of human-level consciousness. It might have vague feelings of dark vs light, the to-be-sought and to-be-avoided, broad internal rumblings, and not much else -- not, for example, complex conscious thoughts about ironies of Hamlet, or multi-part long-term plans about how to form a tax-exempt religious organization. The simple usually precedes the complex. Building a conscious insect-like entity seems a lower technological bar than building a more complex consciousness.

Until recently, that's what I had assumed (in keeping with Basl 2013 and Basl 2014, for example). Now I'm not so sure.

[Dall-E image of a high-tech frog on a lily pad]

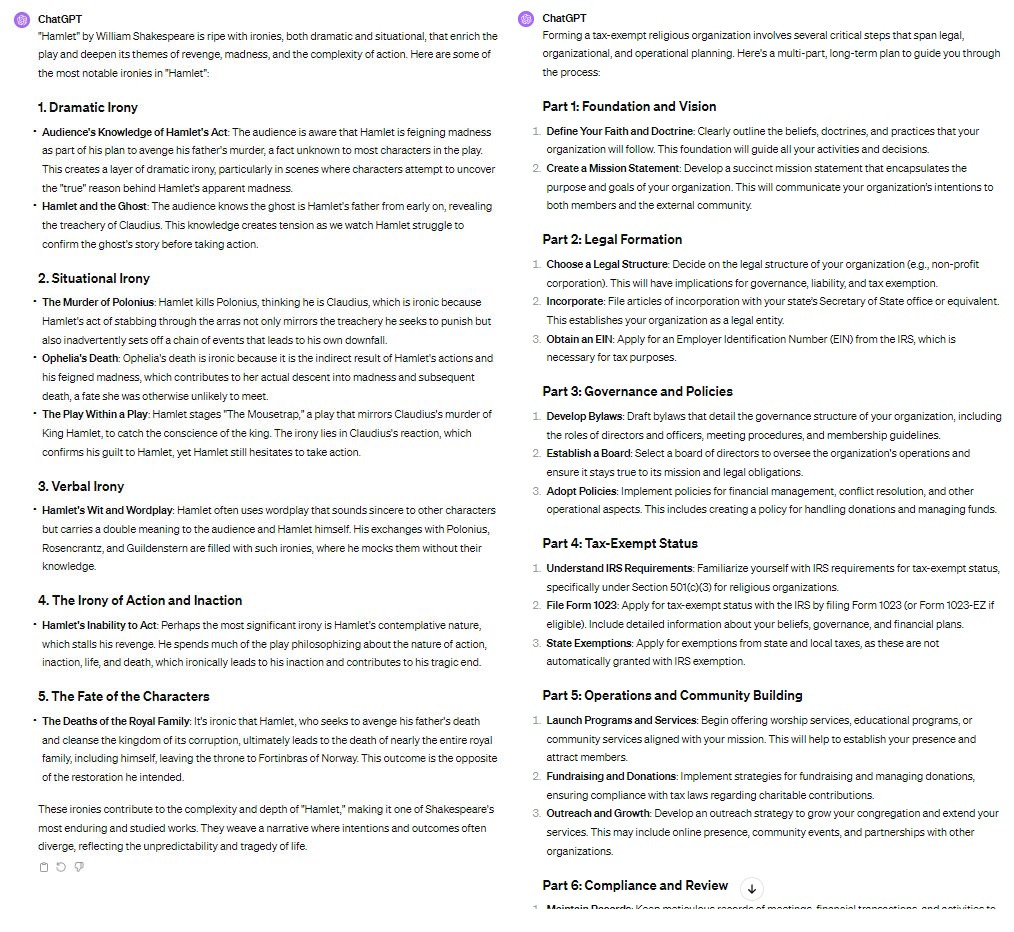

AI systems are -- presumably! -- not yet meaningfully conscious, not yet sentient, not yet capable of feeling genuine pleasure or pain or having genuine sensory experiences. Robotic eyes "see" but they don't yet see, not like a frog sees. However, they do already far exceed all non-human animals in their capacity to explain the ironies of Hamlet and plan the formation of federally tax-exempt organizations. (Put the "explain" and "plan" in scare quotes, if you like.) For example:

[ChatGPT-4 outputs for "Describe the ironies of Hamlet" and "Devise a multi-part long term plan about how to form a tax-exempt religious organization"]

Let's see a frog try that!

Consider, then, the Leapfrog Hypothesis: The first conscious AI systems will have rich and complex conscious intelligence, rather than simple conscious intelligence. AI consciousness development will, so to speak, leap right over the frogs, going straight from non-conscious to richly endowed with complex conscious intelligence.

What would it take for the Leapfrog Hypothesis to be true?

First, engineers would have to find it harder to create a genuinely conscious AI system than to create rich and complex representations or intelligent behavioral capacities that are not conscious.

And second, once a genuinely conscious system is created, it would have to be relatively easy thereafter to plug in the pre-existing, already developed complex representations or intelligent behavioral capacities in such a way that they belong to the stream of conscious experience in the new genuinely conscious system. Both of these assumptions seem at least moderately plausible, in these post-GPT days.

Regarding the first assumption: Yes, I know GPT isn't perfect and makes some surprising commonsense mistakes. We're not at genuine artificial general intelligence (AGI) yet -- just a lot closer than I would have guessed in 2018. "Richness" and "complexity" are challenging to quantify (Integrated Information Theory is one attempt). Quite possibly, properly understood, there's currently less richness and complexity in deep learning systems and large language models than it superficially seems. Still, their sensitivity to nuance and detail in the inputs and the structure of their outputs bespeaks complexity far exceeding, at least, light-vs-dark or to-be-sought-vs-to-be-avoided.

Regarding the second assumption, consider a cartoon example, inspired by Global Workspace theories of consciousness. Suppose that, to be conscious, an AI system must have input (perceptual) modules, output (behavioral) modules, side processors for specific cognitive tasks, long- and short-term memory stores, nested goal architectures, and between all of them a "global workspace" which receives selected ("attended") inputs from most or all of the various modules. These attentional targets become centrally available representations, accessible by most or all of the modules. Possibly, for genuine consciousness, the global workspace must have certain further features, such as recurrent processing in tight temporal synchrony. We arguably haven't yet designed a functioning AI system that works exactly along these lines -- but for the sake of this example let's suppose that once we create a good enough version of this architecture, the system is genuinely conscious.
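To make the cartoon concrete, here is a minimal Python sketch of the architecture just described. Everything in it is an illustrative assumption rather than a real implementation of any Global Workspace theory: the names `Candidate`, `GlobalWorkspace`, `light_sensor`, and `goal_monitor` are hypothetical, a single `salience` number stands in for "attention," and feeding each broadcast back to the modules on the next cycle is only a crude gesture at recurrence. Nothing here is claimed to be conscious, or even close.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Candidate:
    """A representation that a module offers to the workspace."""
    source: str      # name of the module that produced it
    content: str     # the representation itself (a string, for simplicity)
    salience: float  # hypothetical stand-in for attentional priority


# A module sees the previous broadcast (or None) and may propose a candidate.
Module = Callable[[Optional[Candidate]], Optional[Candidate]]


@dataclass
class GlobalWorkspace:
    """Toy workspace: each cycle, select one candidate and broadcast it."""
    modules: Dict[str, Module] = field(default_factory=dict)
    history: List[Candidate] = field(default_factory=list)

    def register(self, name: str, module: Module) -> None:
        self.modules[name] = module

    def cycle(self) -> Optional[Candidate]:
        last = self.history[-1] if self.history else None
        proposals = []
        for module in self.modules.values():
            # Every module receives the last broadcast, so the winning
            # content is "centrally available" to all of them.
            candidate = module(last)
            if candidate is not None:
                proposals.append(candidate)
        if not proposals:
            return None
        # "Attention": the most salient proposal wins the workspace.
        winner = max(proposals, key=lambda c: c.salience)
        self.history.append(winner)
        return winner


def light_sensor(last: Optional[Candidate]) -> Optional[Candidate]:
    """Input module: always reports the same simple percept."""
    return Candidate("vision", "bright patch ahead", 0.6)


def goal_monitor(last: Optional[Candidate]) -> Optional[Candidate]:
    """Side processor: reacts only to a broadcast visual percept."""
    if last is not None and last.source == "vision":
        return Candidate("goals", "approach: " + last.content, 0.8)
    return None


ws = GlobalWorkspace()
ws.register("vision", light_sensor)
ws.register("goals", goal_monitor)
first = ws.cycle()   # only the sensor proposes, so its percept is broadcast
second = ws.cycle()  # the goal module reacts to that broadcast and wins
```

Note that modules are just functions registered by name. On this cartoon picture, adding a new capacity is a single `register` call, which is the design feature the rest of the argument leans on.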

But now, as soon as we have such a system, it might not be difficult to hook it up to a large language model like GPT-7 (GPT-8? GPT-14?) and to provide it with complex input representations full of rich sensory detail. The lights turn on... and as soon as they turn on, we have conscious descriptions of the ironies of Hamlet, richly detailed conscious pictorial or visual inputs, and multi-layered conscious plans. Evidently, we've overleapt the frog.

Of course, Global Workspace Theory might not be the right theory of consciousness. Or my description above might not be the best instantiation of it. But the thought plausibly generalizes to a wide range of functionalist or computationalist architectures: The technological challenge is in creating any consciousness at all in an AI system, and once this challenge is met, giving the system rich sensory and cognitive capacities, far exceeding those of a frog, might be the easy part.

Do I underestimate frogs? Bodily tasks like five-finger grasping and locomotion over uneven surfaces have proven to be technologically daunting (though we're making progress). Maybe the embodied intelligence of a frog or bee is vastly more complex and intelligent than the seemingly complex, intelligent linguistic outputs of a large language model.

Sure thing -- but this doesn't undermine my central thought. In fact, it might buttress it. If consciousness requires frog- or bee-like embodied intelligence -- maybe even biological processes very different from what we can now create in silicon chips -- artificial consciousness might be a long way off. But then we have even longer to prepare the part that seems more distinctively human. We get our conscious AI bee and then plug in GPT-28 instead of GPT-7, plug in a highly advanced radar/lidar system, a 22nd-century voice-to-text system, and so on. As soon as that bee lights up, it lights up big!

I agree with the idea that consciousness won’t emerge gradually in the same way it did in animals. But I don’t think the idea presented here of consciousness as an “add-on” is necessarily right. I’ll suggest two alternatives: (1) Consciousness is an artifact of the imperfections in our mental architecture. I don’t know that Dennett puts it quite that way, but this seems like a reasonable interpretation of Dennett’s “box of tricks” description of how consciousness comes about. (2) Consciousness emerges from desires rather than cognition: awareness of self is caused by a constant monitoring of how distant the self is from some objective, in order to better achieve that objective.

The implications of these two alternate views might be: machines never become conscious because they lack our computational imperfections; machines never become conscious because they are never created with goals, only programming; machines become situationally conscious when their programming gives them an objective; machine consciousness exists but is fundamentally different from ours, because the imperfections in their computational architecture are very different; etc.

There are different schools of thought, as you well know. What is consciousness? Is it only manifested in the much ado about nothing of human existence? You can make a pretty good case that the frog, never needing to think of its existence, is more in tune with its consciousness than we will ever be.

Machine sentience will come with thunder and as a whimper and everything in between, and in ways we cannot conceive of with our limited perception of consciousness.

What a great subject for endless thought exercise.